Tay's icon on Twitter, https://twitter.com/TayandYou

The topic of censorship has been one of great interest and controversy as of late, and it is a difficult one to approach. On the one hand, we hold freedom of speech to be an extremely important right in this country, and sharing different perspectives can produce more thoroughly developed ideologies. On the other hand, our society has become very polarized, and discussions often turn into screaming matches. Throw trolls into the mix, and any semblance of collective discussion aimed at bettering everyone collapses.

The question becomes: when is it appropriate to censor others' speech? Some would argue that everyone should be allowed to say whatever they like, wherever and whenever they like. But this carries its own problems. Even if you believe everyone has the right to say anything at all, what if it is irrelevant to the platform or forum? Should someone be allowed to discuss their favorite programming language on a forum about oak trees? How and where do we draw the line? If some political opinions are allowed and some are not, who gets to be the moderator?

In general, the model is that you choose what you say and which platform you use, and the platform has its own rules about what you can and cannot say. "But wait, isn't that censorship? That's a violation of my First Amendment right to free speech!" This has been an ongoing debate on the Internet, as well as on our very own campus. Actually, the First Amendment only guarantees that the government will not censor your speech. If you use someone else's service, you are subject to that service's rules, and you always have the option not to use it. In other words, if Twitter had decided to shut down Tay's tweets or censor the bot's content, it would have been well within its rights.

|

| xkcd comic from https://i0.wp.com/imgs.xkcd.com/comics/free_speech.png |

|

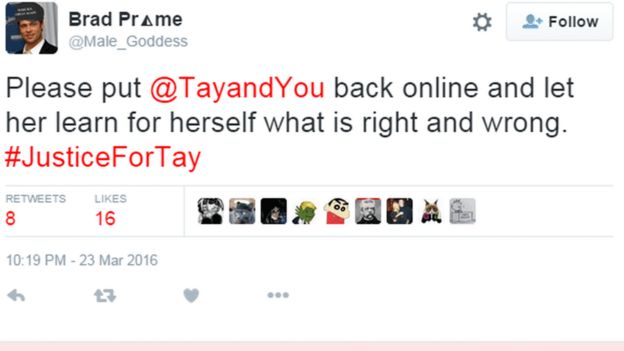

| Tweet screenshot from https://ichef.bbci.co.uk/news/624/cpsprodpb/4774/production/_88929281_taytweet2.gif |

Another problem must be addressed before this one can be fully discussed: It can be hazardous to allow a machine to learn by itself. A self-driving car, for instance, should not be allowed to run amok, arbitrarily deciding whether or not to hit people until it learns that hitting people is bad. Similarly, Tay spewing genocidal rhetoric could be harmful. Is it appropriate for Tay to go through this phase in order to learn right from wrong? Would it be more appropriate for an artificially intelligent machine to learn some basic morality in a simulation before being released into the world? Should all machines be preprogrammed with basic rules that prevail over anything else they might learn?

Unfortunately, I don't have any simple answers, but I do encourage a thoughtful discussion of these ideas. What do you think? When and where is speech appropriate or inappropriate? Should an AI be allowed to create its own morality by learning from the world? Can an AI create its own morality at all? (This is related to the original question, "Can machines think?")

Further Reading and Discussion:

- After Tay, Microsoft released another bot on Kik Messenger that would explicitly avoid talking about politics. Is this a good solution, or would we prefer a politically aware AI chatbot?

- President Bahls's Statement on Freedom of Expression addresses the on-campus free speech discussion.

- Bitcoin's blockchain provides an effectively uncensorable platform for free speech. Hardly anyone reads it, but if you want to, you can read plain-text messages here or download the whole blockchain and search it yourself for other data. See this paper for more details on how this works. Is it good to have an uncensorable platform, or is it too dangerous? Does it make a difference if nobody reads it? What about a tamper-resistant blockchain designed for storing data (or social media posts), like Steem, where reading the blockchain is easy and convenient?

Not only do we need to worry about censoring A.I.s, but if they ever reach the point of self-awareness, is it moral to turn them off?

If you haven't watched Westworld, you need to! I don't want to spoil anything, so I will try to be vague. There are multiple times when the robots start to become self-aware. The solution is to roll them back to a previous update to make them more "manageable." Is this moral? The show raises a lot of other moral questions, too. We should consider watching an episode or two for culture points!

I've never seen the show, but I've heard good things about it! It sounds like reverting a human to a previous state, like time travel, in order to avoid a present problem. An episode of "The Fairly OddParents" comes to mind, in which the characters have access to "redo" buttons. I agree with your culture points idea; I'd love to check out Westworld and see for myself what Hollywood has to say about this AI thing.

This made me think about Twitter's new rules. Because of various incidents in the past months, Twitter has cracked down on hate speech and tweets with violent intent, as well as on users who post tweets depicting sexual abuse.

http://money.cnn.com/2017/10/17/technology/business/twitter-content-moderation-policy/index.html?iid=EL

I think there is a grey area between what is right and what is wrong. The AI should be able to learn by herself. However, in a proper education system, one would not let children learn freely from junkies on the street. For something to count as learning, it needs to be at least partially guided. In the end, freedom without boundaries is not freedom.

What is it, then?

I personally don't think it is wrong for Microsoft to "censor" what Tay would hear and say. Rather than "censor," though, I would prefer the word "guide." Much like what Dat said, in this case Microsoft is like a parent to Tay, and it is the parent's role to guide their children in learning what's right and what's wrong, at least in the culture I come from.
