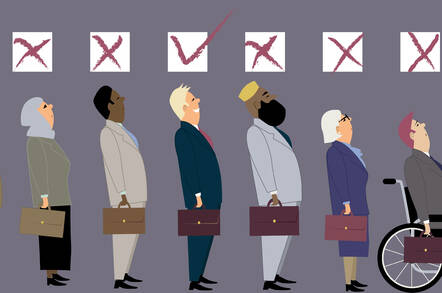

When it comes to AI technology, there are many concerns that come to mind. One concern we may not often think of, however, is bias. A familiar case of biased AI is Microsoft's chatbot, Tay. Microsoft released Tay on Twitter in March 2016, and within 24 hours the bot had learned to use misogynistic, racist, and antisemitic language from other Twitter users. Its behavior was also inconsistent. For example, when users tweeted at Tay about "Bruce Jenner," the bot's replies ranged from praise for Caitlyn's beauty and bravery to outright transphobic remarks. But what if biased AI goes beyond the occasional chatbot?

Unsurprisingly, the biases in AI often come from biased data. Because AI systems are designed to become more accurate the more time and data they are given, it is not surprising that, when given biased or faulty data, they often produce results that amplify that bias. For instance, when trained on a set of pictures that were 33% more likely to show women cooking than men cooking, the AI predicted that women were 68% more likely to be the ones cooking in the photos.
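To see how a model can amplify a skew rather than merely copy it, consider the toy sketch below. It is a deliberately simplified, hypothetical illustration: the `majority_rule_predictions` and `share` helpers and the 200/100 split are invented for this example and do not reproduce the study's 33%/68% figures. The idea is that when a model has no reliable cue in an image, the guess that maximizes accuracy is simply the more common training label, so a 2:1 skew in the data can become a 100% skew in the predictions.

```python
from collections import Counter

def majority_rule_predictions(train_labels, n_test):
    """Hypothetical toy 'model': with no informative features, the
    accuracy-maximizing choice is to always predict the most common label."""
    majority, _ = Counter(train_labels).most_common(1)[0]
    return [majority] * n_test

def share(labels, value):
    """Fraction of labels equal to value."""
    return labels.count(value) / len(labels)

# Hypothetical training set: 2 of every 3 "cooking" images show a woman.
train = ["woman"] * 200 + ["man"] * 100
preds = majority_rule_predictions(train, n_test=90)

print(f"women in training data: {share(train, 'woman'):.0%}")  # → 67%
print(f"women in predictions:   {share(preds, 'woman'):.0%}")  # → 100%
```

Real models are rarely this extreme, since they usually have at least some informative features, but the same pull toward the majority label is one way a moderate skew in the data turns into a larger skew in the output.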

The answer seems simple: remove the bias and train the AI on good-quality data. The challenge, however, lies in the "unknown unknowns": gaps in the data that are not easy to recognize. For instance, if an AI is trained only on pictures of black dogs and of white and brown cats, it may incorrectly identify a white dog as a cat when it meets one in the testing data. This mistake is fairly easy to catch in an AI that tells dogs from cats, but it becomes far harder to catch in real-world situations, especially when the AI is confident that it is right.
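The dog-and-cat example can be sketched with a hypothetical nearest-neighbor classifier that sees only one made-up feature, fur brightness (0.0 for black, 1.0 for white). The training data, the `knn_predict` helper, and the choice of `k=3` are assumptions for illustration. Real image classifiers learn far richer features, but the failure mode is similar: a white dog lands in a region of the data where the model has only ever seen cats.

```python
from collections import Counter

# Hypothetical 1-D feature: fur brightness, 0.0 = black, 1.0 = white.
TRAINING_DATA = [
    (0.05, "dog"), (0.10, "dog"), (0.12, "dog"),  # only black dogs
    (0.45, "cat"), (0.50, "cat"),                 # brown cats
    (0.92, "cat"), (0.97, "cat"),                 # white cats
]

def knn_predict(brightness, data=TRAINING_DATA, k=3):
    """Return (label, confidence) by majority vote of the k nearest examples.
    'Confidence' here is just the fraction of neighbors that agree."""
    nearest = sorted(data, key=lambda item: abs(item[0] - brightness))[:k]
    votes = Counter(label for _, label in nearest)
    label, count = votes.most_common(1)[0]
    return label, count / k

print(knn_predict(0.08))  # a black dog → ('dog', 1.0)
print(knn_predict(0.95))  # a white dog, never seen in training → ('cat', 1.0)
```

The model reports the same full confidence for its wrong answer as for its right one, which is why a gap like this is hard to spot from the model's output alone.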

(image source: https://www.theregister.co.uk/2017/04/14/ai_racial_gender_biases_like_humans/)

AI learning biases against certain groups may at first seem harmless to some, especially in low-stakes situations such as telling cats from dogs. The stakes become extremely high very quickly, however, when AI gets involved in weightier matters such as the court system. In May 2016, a report showed that Compas, an AI program used to assess the risk that a defendant will reoffend, was biased against black defendants: it wrongly labeled black defendants as likely to reoffend at nearly twice the rate of white defendants. Other examples include the first AI to judge a beauty contest, which chose predominantly white faces as winners, and the LinkedIn search engine, which suggested a similar-looking masculine name when users searched for a contact with a feminine name.

So, what can we do? First, we can be more mindful of the data we use to train our AI. Paying close attention to details such as where the data comes from, and what other biases and factors may have shaped it, can tell us many important things. We can also make an active effort to reduce bias in AI by joining or forming groups such as Microsoft's FATE (Fairness, Accountability, Transparency and Ethics in AI), and by forming and supporting groups and initiatives that encourage people from marginalized groups to join the field of AI. Furthermore, we can put an emphasis on developing explainable AI. Training data alone already leaves vast room for confusion and error; adding the opacity of "black box" models only exacerbates the problem and makes it harder to identify the source of an AI's bias.

With AI being used more and more often to make predictions across many facets of daily life, from loans to health care to the prison system, it is more important than ever to understand how AI can amplify biases that are already present. It is not only our job but our responsibility to understand how these biases may affect many people's lives and, hopefully, to devise ways to correct for these biases and skewed results.

“The worry is if we don't get this right, we could be making wrong decisions that have critical consequences to someone's life, health or financial stability,” says Jeannette Wing, director of Columbia University's Data Sciences Institute.

The technology we develop can have a vast impact on many lives, and it has the potential to shape the future. Shouldn't we take the time to ensure, to the best of our ability, that this technology does not hinder but rather facilitates the success of those who need it most?

Feel free to share your thoughts below. Should we be concerned about biased AI? What ways should we focus on to diminish this bias? Is AI bias shaped more by the data or by the AI itself? Would AI sentience or awareness affect this issue and our response to it?

If you would like to read more about Microsoft's chatbot, Tay, you can do so here.

If you would like to read more about Compas, click here.

Here you can read about the LinkedIn search engine.

You can read more in depth about AI bias and possible responses here.