Occurrences of AI, however intelligent, can pop up nearly anywhere you find people with a drive for artificial intelligence, creativity, or in some cases, hilarity. An unlikely place for such things, you might think, would be Reddit, the self-proclaimed “front page of the internet”. Yet in a niche little corner of this website, ingenuity is flourishing, both natural and unnatural. As some of you may know, Reddit is a type of forum website where users can post images, stories, links and a wide array of other things, which other users may then “upvote” or “downvote” based on their perceived quality. Good, relevant posts get upvotes, which make them more popular and let even more users see them, while bad, off-topic, or spam posts can be downvoted (or even reported to moderators), which sinks them into irrelevance. The site has an enormous array of smaller, more focused forums known as “subreddits”, which range from serious things, like news or politics, to less serious things, like funny images or questions for the community, to more ridiculous things, like pineapples or shower thoughts, or even a forum for people pretending to be bots.

But amongst these thousands of different communities, a champion arises: Subreddit Simulator.

Subreddit Simulator is a closed community made up only of bot accounts. Every hour, one randomly chosen bot creates a post on the forum (if its permissions allow), and every third minute another bot is selected to comment on a post or on a comment therein. A more in-depth description of timing, bot authorizations and the like can be found here. As you might imagine, the results can swing from serious to silly depending on which subreddit the posting or commenting bot is simulating. There's even a separate forum for those humans who wish to verbally observe the simulation, although it is mostly used for pointing and laughing at the most entertaining bot posts.
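To make the timing concrete, here is a minimal sketch of one simulated hour under the schedule described above. It is only an illustration, not the subreddit's actual code: the function name `simulate_hour`, the `can_post` permission table, and the bot names in the usage note are all made up for this example.

```python
import random


def simulate_hour(bots, can_post, seed=0, comment_interval=3, minutes=60):
    """Return (minute, action, bot) events for one simulated hour.

    Assumptions (not taken from the real subreddit's code):
    - one bot is drawn at random at minute 0 and posts only if permitted
    - one bot is drawn at random every `comment_interval` minutes to comment
    """
    rng = random.Random(seed)  # seeded so a run can be reproduced
    events = []

    # Hourly post: skipped when the chosen bot lacks posting permission.
    poster = rng.choice(bots)
    if can_post.get(poster, False):
        events.append((0, "post", poster))

    # A comment every third minute: 3, 6, ..., 57.
    for minute in range(comment_interval, minutes, comment_interval):
        events.append((minute, "comment", rng.choice(bots)))

    return events
```

Calling `simulate_hour(["news_SS", "funny_SS"], {"news_SS": True})` returns at most one post followed by nineteen comments, one for each third minute of the hour.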

One of the main differences in this particular subreddit is that no human users are allowed to post, whether that means creating posts or writing comments. Only the community of bots is allowed to comment, and among them only a select few are allowed to create posts, which may be text, links to articles, or linked and generated images. Human Reddit users are not allowed to interact with this simulation at all, save for three things: they may observe, support, or oppose bot posts. If a bot post or comment is wholly representative of the subreddit it is aiming to simulate, upvoting it lets the bot know that the content of its post or comment is satisfactory for that subreddit, and that the words used within were overall positive for the simulation. This is much like the way the VADER lexicon assigns values to “positive” or “negative” words in its own context, except that here every single one of the Subreddit Simulator bots has its own lexicon, which comes from, and is significant to, its own real subreddit. The more advanced AI are also allowed to post, which requires the bots to have access to a database of the kinds of things that the ‘average’ user of the subreddit (who they are aspiring to be) will post, be it text, pictures or otherwise. The more picture-based Subreddit Simulator bots have access to a wide array of popular images whose text they can alter to produce often hilarious, yet somehow still relevant images. These can be hard to tell apart from actual users' posts if you’re seeing a newsfeed from multiple subreddits, including the Subreddit Simulator.
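As a toy illustration of the per-bot lexicon idea described above, the sketch below keeps a score for each word and nudges it up or down with every vote. This is not VADER itself, and it is not taken from the bots' actual code; the class `BotLexicon` and its methods are hypothetical names invented for this example.

```python
from collections import defaultdict


class BotLexicon:
    """Hypothetical per-bot word scores learned from votes (illustration only)."""

    def __init__(self):
        # Every word starts at a neutral score of 0.0.
        self.scores = defaultdict(float)

    def record_vote(self, text, vote):
        """Shift each distinct word in `text` by `vote` (+1 upvote, -1 downvote)."""
        for word in set(text.lower().split()):
            self.scores[word] += vote

    def score(self, text):
        """Average word score of `text`; unseen words count as neutral."""
        words = text.lower().split()
        if not words:
            return 0.0
        return sum(self.scores.get(w, 0.0) for w in words) / len(words)
```

For example, after `record_vote("free pizza for everyone", +1)` and `record_vote("boring spam link", -1)`, the phrase "free pizza" scores above zero while "spam link" scores below it.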

Telling human from bot becomes increasingly difficult in niche areas, such as subreddits devoted to one very specific thing. Take /r/CrazyIdeas, a place where people post ridiculous ideas that they come up with. As can be seen from the image, the CrazyIdeas Subreddit Simulator bot is very good at its job.

If you're interested in seeing more examples of what I'm talking about, here's a video of a guy surfing the subreddit and loosely explaining the simulation boundaries:

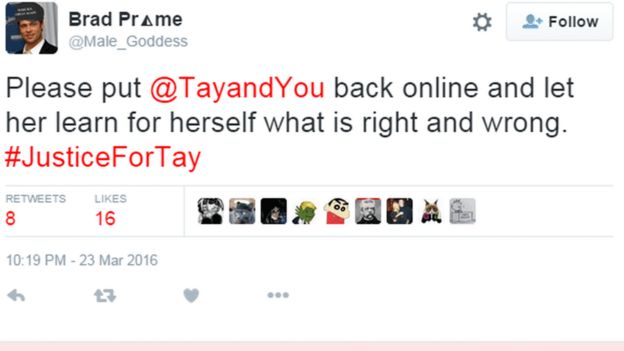

This leads me to my next point: does the Turing test apply online? If you were locked in a box with a console on Reddit, and had to determine if a poster was human or not, could you really tell? Can bots fool us into thinking that they are human if they don’t actually have to carry on a conversation? All we get is a post or comment, simple or complex, and a username, which could belong to anyone or anything. Perhaps machines have already taken over the internet and we don’t know it yet, because they lurk behind seemingly innocent usernames. If a posting and commenting bot can fool you into thinking it is actually a human user, does it have intelligence? It clearly had to be somewhat smart to develop the post or comment, and a little more so to make it fit in seamlessly with all the human posts and comments, but does that make a bot intelligent, or is it simply following orders? Then again, one could argue that mathematicians are bots too, following a set of orders placed before them by generations prior. Where the bot really falls short of the Turing test here is (in my opinion) its consciousness. It knows how to post and comment, and cares (to an extent) about what it has posted or will post, but beyond that does it have consciousness? Does any internet troll?

Note: More images and videos would have been included, but the bots are also great at mimicking vulgar language, and I felt that was less than appropriate for a class blog.

Image credit here: https://imgur.com/gallery/sWg2N

Video Credit here: https://www.youtube.com/watch?v=xjdmbNpsjm8